The first, and likely the most essential, lesson I was ever taught about data science was given to me by my father, Dr. Michael Agishtein, who rode into the world of data computing on the early waves of computational physics and geometry. He then transitioned to computational topography, and then, as is the end of many a physicist, to finance. When I was starting my data science career, he distilled his decades of experience into one simple statement: garbage in, garbage out. My father’s poetic remark spoke of the simple fact that a model is only as good as its input data, and good data is harder to find than many appreciate.

One of the bigger adjustments I had to make as I shifted from academia to industry was how much of my time went to collecting, cleaning, and formatting data. When you read most NLP (Natural Language Processing) research papers, you are rarely told how the text that the model was run on was acquired. In the world of research, the data preprocessing is usually left to the “Oompa Loompas”, and the glory goes to the model. Yet, outside the academic chocolate factory, models are bought, but data is fought for.

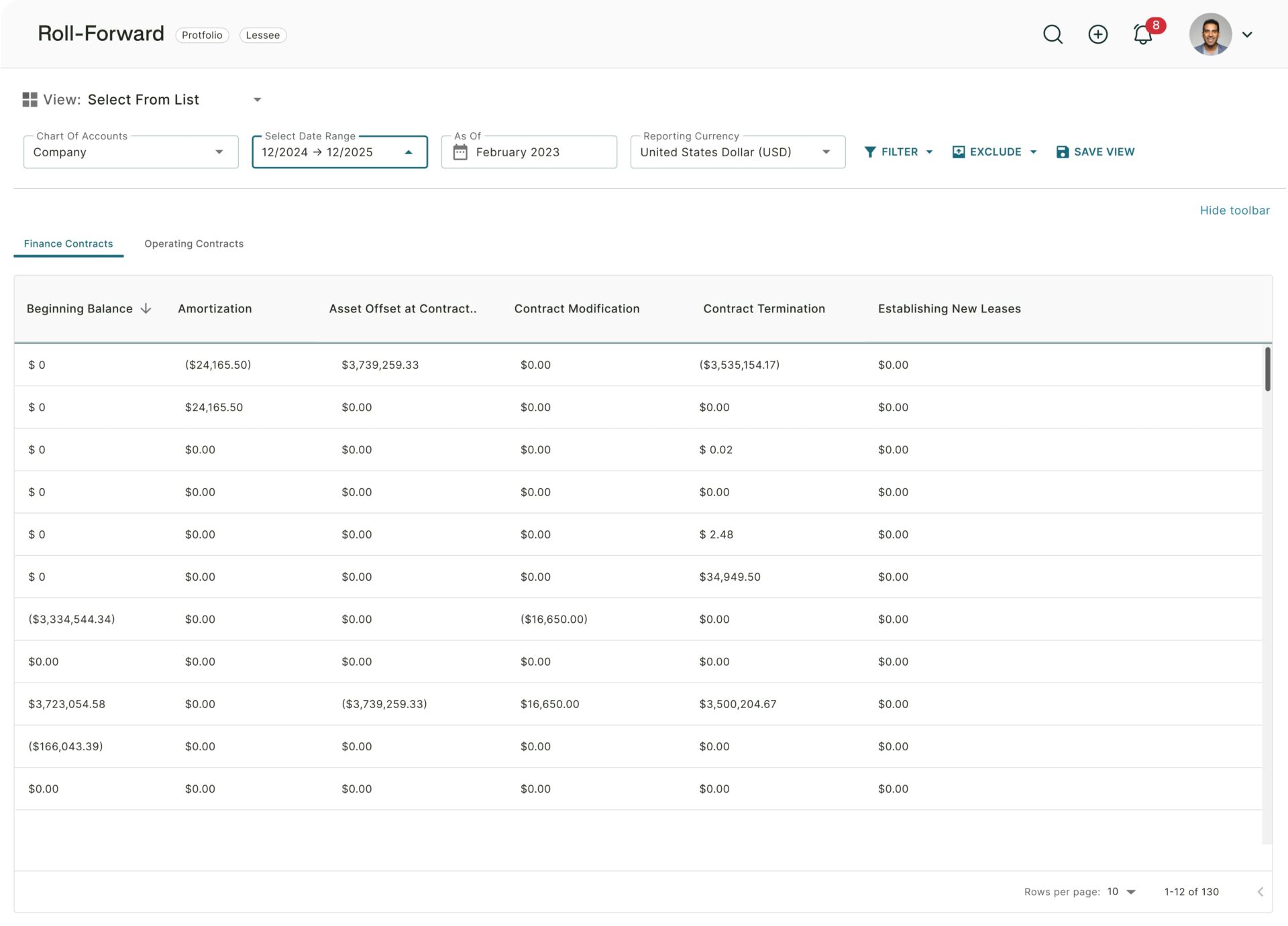

At Trullion, we are extracting insights from (often poorly scanned) PDFs, many with some handwriting on them, and at times even coffee stains (at least that is what I hope they are). Standard OCR (Optical Character Recognition) libraries work decently well on ideal, computer-generated PDFs, but their accuracy often degrades rapidly when run on scanned or blurry documents. Yet here at Trullion we value precision above all else, so our advanced NLP models are useless if all we have is garbage in.

Since I assumed my role here at Trullion, we have made it one of our pressing priorities to innovate on our current OCR capabilities. We are leveraging new and old research, together with some out-of-the-box thinking, to create an innovative data processing pipeline. Yet, before we can claim any progress, we need to create a robust benchmark to measure our performance.

Generally, when comparing texts, as in our scenario of comparing the text extracted by the OCR with the true contract text, the objective is to find out whether the texts are similar. Yet the word ‘similar’ can mean different things to different people. My six-year-old son, for example, considers his candy pile ‘similar’ to his sister’s if, and only if, his pile is at least twice as large. In NLP research, quantifying textual similarity as a metric is an essential task, and a lot of ink has been spilled developing a precise definition.

The common methods to compute textual similarity tend to coalesce around two distinct approaches: edit distance metrics and the more modern cosine similarity on textual embeddings. Neither approach was sufficient for our use case. What matters to us most is word integrity; if a word has a single wrong character, it is completely invalid.

Additionally, edit distance metrics are sensitive to white space: introduce some more white space in the file and the score changes. This is useful for many text similarity tasks. For our needs, however, it is a distraction, because our entity recognition engine is smart enough to ignore extra spacing. Text embedding models were not appropriate either, as they introduce confounding effects by scoring texts with similar meanings as close even when the words are different.
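To make the white space problem concrete, here is a minimal sketch using a textbook Levenshtein edit distance. It is not the code used at Trullion, and the sample strings are made up for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Textbook dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to the empty prefix of b
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


# Hypothetical sample strings, for illustration only.
truth = "lease term 36 months"
extra_spaces = "lease  term   36 months"  # three harmless extra spaces
one_bad_char = "lease term 86 months"     # one misread digit

spacing_distance = levenshtein(truth, extra_spaces)
typo_distance = levenshtein(truth, one_bad_char)
print(spacing_distance, typo_distance)
```

In this example the three harmless extra spaces cost an edit distance of 3, while the single misread digit, which changes the lease term itself, costs only 1. That is the opposite of the ranking we care about.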

Our solution to this problem was to use a modified “Bag of Words” approach. The OCR text is compared to a ground truth text by running it through three separate metrics:

- Word Similarity

- Character Similarity

- Language Similarity

We wrote these metrics in-house, because we could not find published research that gave us the necessary tools. The first metric compares individual word occurrence counts while ignoring stop words (common words like ‘if’, ‘the’, etc.). The second metric does something similar with the characters in the file, which provides a more granular view of the OCR performance and helps us tweak the hyper-parameters of our pre-processing tools. The final metric uses statistical methods to query a language dictionary and arrive at an estimate of the proportion of the contract made up of the target language.
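The exact formulas are not spelled out here, so the following is only a rough sketch of how three metrics of this kind could look. Every name in it (`STOP_WORDS`, `tokenize`, `counter_overlap`, and the three `*_similarity` functions) is hypothetical, the overlap formula is an assumption, and the language metric is reduced to a plain dictionary lookup rather than a statistical method.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption, not a real production list).
STOP_WORDS = {"a", "an", "and", "if", "is", "of", "the", "to"}


def tokenize(text):
    """Lowercase and split into alphanumeric tokens; spacing is ignored."""
    return re.findall(r"[a-z0-9]+", text.lower())


def counter_overlap(truth, candidate):
    """Share of the ground-truth counts that also appear in the candidate."""
    total = sum(truth.values())
    if total == 0:
        return 1.0
    return sum((truth & candidate).values()) / total  # multiset intersection


def word_similarity(truth_text, ocr_text):
    """Bag-of-words overlap on occurrence counts, ignoring stop words."""
    truth = Counter(w for w in tokenize(truth_text) if w not in STOP_WORDS)
    ocr = Counter(w for w in tokenize(ocr_text) if w not in STOP_WORDS)
    return counter_overlap(truth, ocr)


def character_similarity(truth_text, ocr_text):
    """Same idea at character level, ignoring white space."""
    truth = Counter(c for c in truth_text if not c.isspace())
    ocr = Counter(c for c in ocr_text if not c.isspace())
    return counter_overlap(truth, ocr)


def language_similarity(ocr_text, vocabulary):
    """Fraction of tokens found in a target-language vocabulary (simplified)."""
    words = tokenize(ocr_text)
    if not words:
        return 0.0
    return sum(w in vocabulary for w in words) / len(words)


# Hypothetical example: extra spacing plus one misread word ("term" -> "terrn").
truth_text = "The lease term is 36 months"
ocr_text = "The  lease   terrn is 36 months"
vocabulary = {"the", "lease", "term", "is", "months"}

print(word_similarity(truth_text, ocr_text))
print(character_similarity(truth_text, ocr_text))
print(language_similarity(ocr_text, vocabulary))
```

In this sketch the extra spacing has no effect on any score, while the single misread word counts as a complete miss at the word level (3 of 4 non-stop words match) and only as a small dip at the character level. That mirrors the division of labor described above: the word metric enforces word integrity, and the character metric gives the finer-grained signal.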

Using these tools, we have been benchmarking and fine-tuning our proprietary OCR engine, and we are excited to see its steady performance improvement. Stay tuned for our next conversation about our approach to low-latency noise reduction!