Spell checkers have been making humanity look smarter since the late 1970s. Early versions would vet a text for mistakes by checking each word in a text against a stored dictionary. As time went on and research advanced, they began using various methods (word similarity metrics, n-grams among them) to suggest possible corrections. These techniques would search the dictionary for words that were ‘similar’ to the errant word, and then, using various statistical models, order, and even filter, the possibilities. Fast forward to modern times; deep learning, massive dictionaries, and personalized writing history have ushered in spell checkers that can just about read our minds.

With all the power of the modern age, spell checkers still often rely on humans to select the correct word from a list of possible options — to be the final arbiters of accuracy. A fully autonomous spelling correction engine, even after half a century, poses an insurmountable challenge. While some spelling errors are simple to correct, many are ambiguous or context-dependent. For example, take this sentence: “John drank so much beur he thought he could fly.” If we only consider word similarity, we can replace the word ‘beur’ with either ‘beer’ or ‘bear’. Both words are only one character away from the misspelled word. The word ‘bear’ may even occur far more frequently in English texts than ‘beer’.

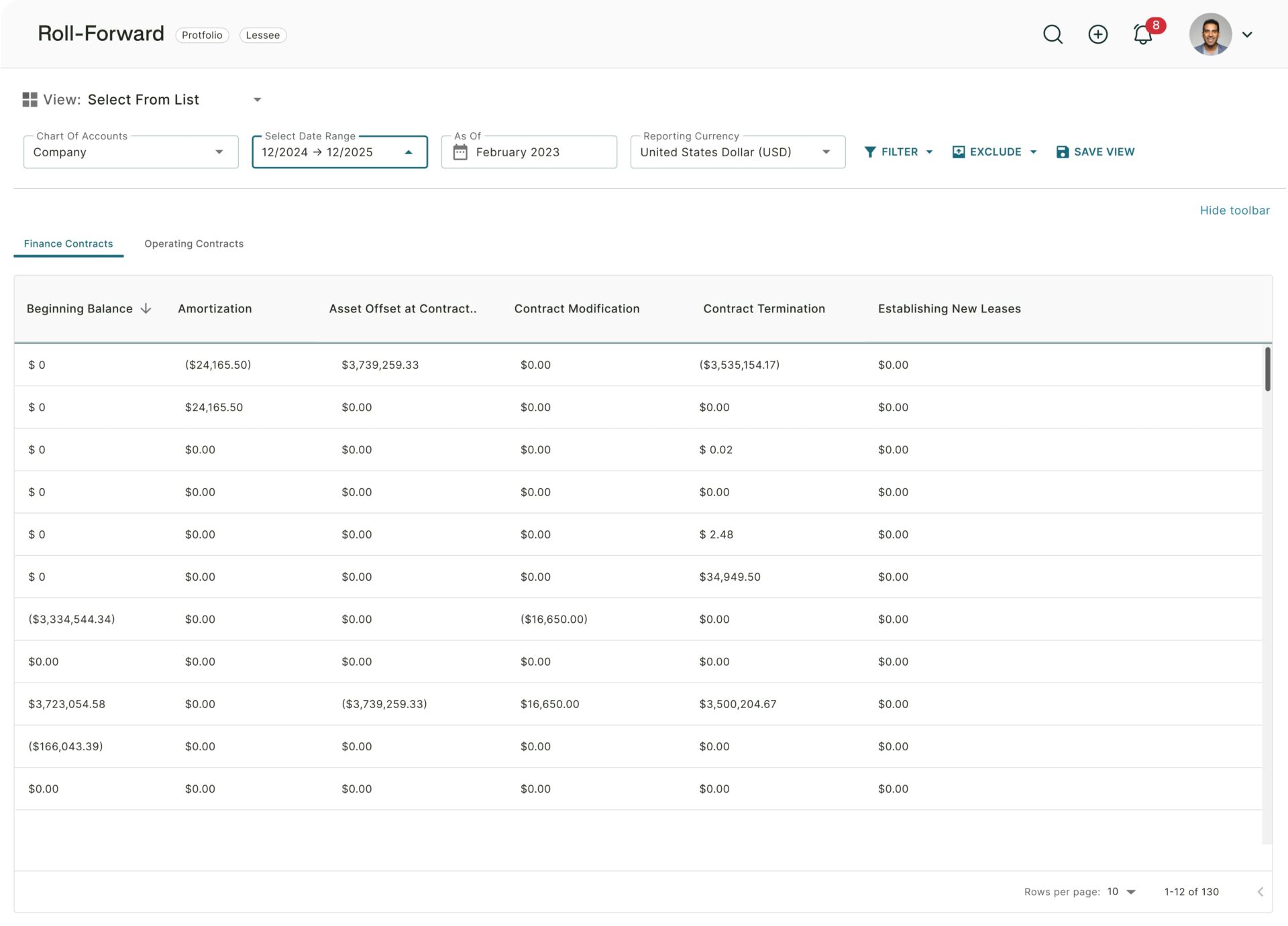

At Trullion, the AI team handles the ingestion, and parsing of unstructured PDFs. This task involves having the system ‘read’ the file and then extract the actionable data from the text. OCR (optical character recognition) engines are not perfect, and they allow mistakes to creep into the text. Even small textual errors, when they propagate downstream, can cause large problems. For example, if the word ‘Landlord” became “Lanbliod” we lose an important piece of data when searching for the Lease parties. To grapple with the issue, we decided to build an autonomous text correction engine. Yet, developing a fully autonomous system would mean the algorithm choosing from a collection of possible options with no human input. A human-free approach runs the risk of correcting incorrectly, possibly doing a great deal of damage. Thus, for our use case, post-OCR processing, we would need to develop an algorithmic method to choose safely (meaning adhering to the ‘do no evil’ adage).

If this problem isn’t interesting enough, our use case posed additional challenges. In general, spell-checkers deal with errors introduced by human users. These errors tend to follow specific, and in some sense, predictable patterns. These include – typographical errors (three -> there), cognitive errors (piece -> peace, too -> two) keyboard proximity errors (hello -> hrllo), among others. Understanding these patterns allows for human-specific solutions.

One approach to error correction on human-generated text is to develop a language translation model that is trained on a large dataset of human text, warts, and all. This approach leverages the patterns of human errors to generate a ‘translation’ from misspelled text to its correct counterpart.

OCR errors, on the other hand, are caused by a multitude of factors (scan quality, font type, text size, the computer’s emotional state) that are absent from human writing. For example, while humans confuse characters that are close to each other on the keyboard, OCR confuses those that look alike. Yet, which characters look similar can change based on font, noise level, text skew, etc. In addition, problems like mistaken spacing, random deletion/insertion, and spurious characters plague OCR systems in an entirely different way than their human counterparts.

To add another challenge into the mix: Trullion’s AI solution is a real-time system, forcing us to operate under strict time constraints. While checking if a word is in a dictionary does not increase with the size of the dictionary (this has to do with a concept called hashing), looking for similar words does. With a word dictionary containing a million words or so, searching through it, even if we are only doing it ten or so times becomes prohibitively inefficient. Any solution would have to speed up the dictionary look by a factor of ten or more.

To resolve the dictionary look-up problem i.e., minimizing the latency of looking through an enormous list for similar words, Moran Nechushtan, an algo-developer on Trullion’s A.I. team devised a devilishly clever preprocessing algorithm whose details are too dense to detail here. In very, and I mean very, broad strokes, Moran modified our existing dictionary by adding all possible word errors mapped to their true versions. Just doing such an approach naively would cause the dictionary size to explode, but Moran devised a method to encode all the errors in a minimal amount of space. Using this new, modified dictionary, the time needed for comparisons plummeted. His work led to blinding efficiency improvements in our error correction capabilities. Yet the challenge of resolving the multiple correction possibilities remained.

To adequately present our resolution, I need to digress a bit into the topic of language models. A language model is a mathematical tool to help incorporate the notion of context into language processing. While there are different types of models (statistical n-gram models, deep learning models, and others) we chose to use a model framework called BERT to generate context-aware numerical embeddings.

A numerical embedding is an encoding that takes a piece of text and maps it to a collection of numbers. These numbers have certain very powerful properties. Using them, we can now do some to introduce the concept of ‘similarity’ in a meaningful way. Consider the following two sentences “Moran drinks chocolate milk each afternoon”, and “Shlomo inhales buckets of coffee all day”. These sentences have very few similar words, yet their meaning seems very similar. If we were to encode these sentences into a collection of numbers and do a bit of linear algebra on them, we would see that they are close to each other mathematically.

With this in mind, we noticed the following fact as well. Given our original sentence “John drank so much beur he thought he could fly,” if we simply deleted the misspelled word, and compared the sentence without the word to the two competing sentences with the possibilities inserted, the context embedding would be much closer with the correct word, than with the alternate words. With this insight, and some linear algebra and probability tricks, we were able to harness the power of the model, without paying the expensive performance cost. When we ran it for the first time our results caused Moran to skip his afternoon chocolate milk.

Our algorithm has a long way to go before we can claim that we have conquered fully autonomous spelling correction, maybe it never will. However, this first step is already making an impact. Even with our ‘do no evil’ safeguards in place, the algorithm has improved our OCR accuracy enough (we save numbers for investors) that the data extraction pipeline has changed our ideas about what could be possible, and spurred a new burst of innovation in that area. Yes, this is still not a perfect system, yet when I read the logs and see what Moran’s algorithm can do I can’t help but be optimistic.